Design

Jake Ryland Williams

Assistant Professor

Department of Information Science

College of Computing and Informatics

Drexel University

Some themes

Data science projects are prone to failure or non-adoption,

but design-thinking strategies can help to avoid hazards.

Knowing the audience and their needs before getting in deep

is one of the surest recipes for success,

along with experimental iteration, incremental improvement,

and collaborative optimization with UX designers.

What do we mean by design?

With the range of components in data science applications,

thoughtful design can impact success at various stages.

We've touched on a few points of design already, like

data schemas, determining what data is upon collection;

storage systems, determining data access and usage;

and depiction, determining how results are communicated.

Function and utility of systems are crucial to success,

but whether an outcome is a product, thesis, or decision,

subjective human components often surround success,

which is where thoughtful design comes in once again:

user uptake through empathy and optimization of experience.

Designing for the unknown

Initiating a point of data collection sets precedents;

choices over what data to collect and from whom

have downstream effects, oftentimes unexpected.

If unsure about the usefulness of some data dimension,

don't discard it to find out later it's missing,

even if it means more complexity on the front end.

However, data science is often an iterative process,

i.e., expect to loop back and adjust systems frequently.

Whether to improve user experience or fix a struggling system,

a thoughtful data scientist might consider oneself a steward.

So many ways to fail

Despite hype and high expectations, DS is often unsuccessful.

While failure hazards can be out of a scientist's control,

like a company's slowness to adopt tech-inspired change,

avoiding bad practices can help ensure success.

A few common pitfalls include:

- jumping into a favorite or comfortable solution,

- behaving condescendingly as a DS "unicorn,"

- working in a silo,

- reinventing existing research,

- not prototyping,

- not thinking ahead to production,

- using uninterpretable algorithms,

- and ignoring emotional factors.

Who/what is a customer, or user?

Customers/users are all important to data scientists,

and the term suggests one who has adopted a system.

But data products are not exclusive as DS outputs,

e.g., analysis might inform a business decision,

or a report might target publication or patent,

and there are analogs of "user" in each of these,

namely the decider of a decision or the reviewer of a journal.

These are the users/customers/adopters in these contexts,

and there may be a number of them present as stakeholders.

So successful data science needs to be thoughtfully aware,

targeting interests through empathy and effective communication.

Whatever a data science output may be,

there's opportunity in shaping design for a target audience.

Designing for users

In an upcoming reading: Design Thinking for Data Scientists

George Roumeliotis (Intuit) provides salient wisdom:

"Fall in love with the customer problem,

not a particular solution."

and discusses "deep customer empathy," e.g., one should ask:

- What is your role in the project?

- What problem should this project be solving?

- What is the rationale for this project?

- What are your hopes and concerns around this project?

- What data, systems, and processes should this project consider?

- What criteria would you use to evaluate a proposed solution?

- What criteria would you use to evaluate a deployed solution?

What's the plan?

Knowing the target audience helps to frame the problem,

but our focus is data science; we are still beholden to data.

So the importance of EDA remains as true as ever,

and an integrated motto might be something like:

"Know the customer's needs as deeply as the(ir?) data's potential."

Data modeling benefits from design thought too.

Widely available open-source code allows a broad view,

i.e., "broad to narrow" model consideration is feasible,

since the programming onus is largely lifted from us.

However, brainstorming should generate a bulk of ideas,

and judgment be withheld until the many are available,

and their merits and drawbacks can be compared,

rendering a "narrow" few for final review.

Data products

Data science often results in reports and decision support,

but data products might have popularized the field the most.

Recall examples: Google search, Amazon recommendations,

Uber routing, Yelp ratings, mobile text completion, etc.

For all, munging, analysis, and modeling are necessary,

but the interface people use arguably has the most impact,

and has design components outside of the underlying "science."

So, while data science often happens as a "team sport,"

with front end design not directly done by the data scientists,

knowing the concerns surrounding data products and design

can help scientists contribute critically towards success.

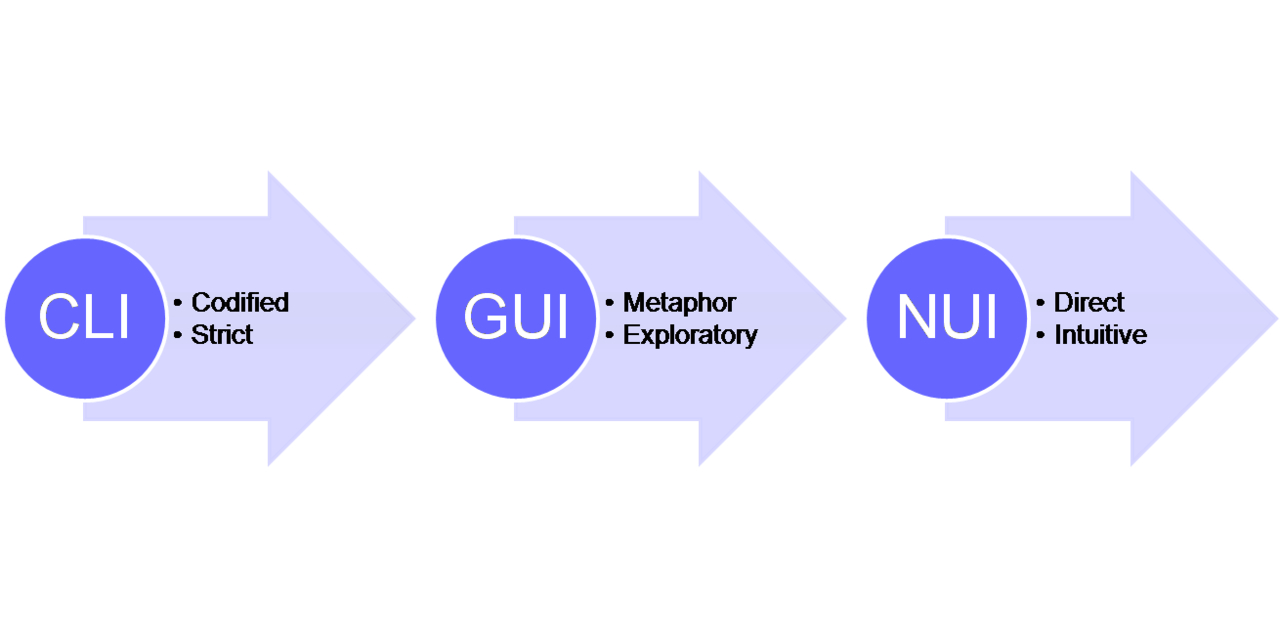

User interfaces

User interfaces enable contact between people and machines,

and so have the ability to make or break data products.

However, an interface is a medium used to deliver a service

and not the service itself—that is the data science output,

which is necessarily constrained by the user interface.

A general issue with interfaces is user training,

which should be made easy/comfortable for user adoption.

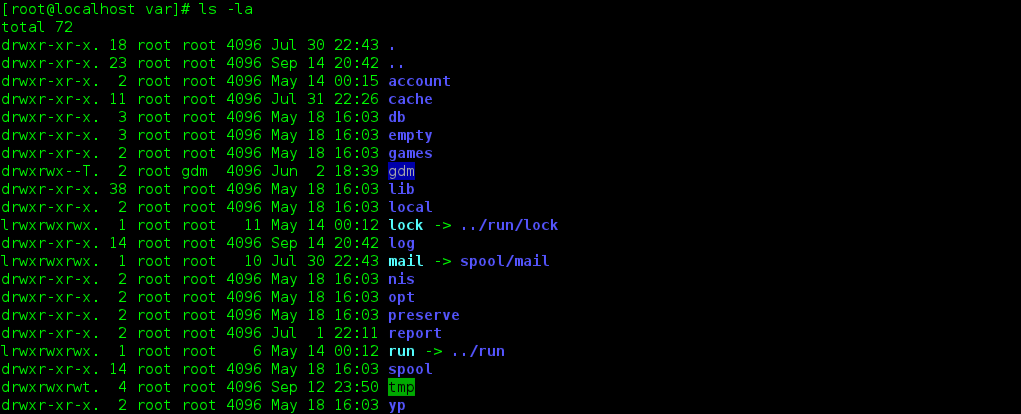

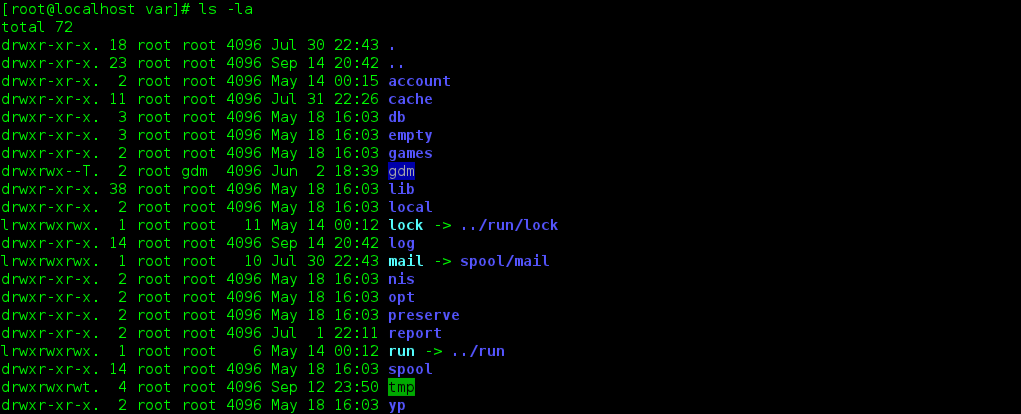

Command line interfaces

Command line interfaces (CLIs) are low level and text based,

and their resulting light weight makes them suitable for development.

With CLIs, commands are executed in sequence, one after another,

or organized in a script and then executed

to render a result that occurs in memory or on some file system,

with progress often not visible until after process completion.

CLIs are strict, in that they have very specific syntaxes.
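
As a small illustration of that strictness, here is a minimal sketch of a command line tool built with Python's standard argparse module. The tool name `summarize`, its `--stat` option, and the `run` helper are hypothetical, chosen only for this example.

```python
import argparse


def build_parser():
    # Hypothetical tool: summarize a list of numbers from the command line.
    parser = argparse.ArgumentParser(
        prog="summarize",
        description="Summarize numbers given on the command line.",
    )
    # One or more positional values, each of which must parse as a float.
    parser.add_argument("values", nargs="+", type=float,
                        help="numbers to summarize")
    # Only the listed choices are accepted; anything else is a syntax error.
    parser.add_argument("--stat", choices=["mean", "max", "min"],
                        default="mean", help="which summary to compute")
    return parser


def run(argv):
    # Parse the argument list and compute the requested summary.
    args = build_parser().parse_args(argv)
    if args.stat == "mean":
        return sum(args.values) / len(args.values)
    if args.stat == "max":
        return max(args.values)
    return min(args.values)
```

Calling `run(["1", "2", "3", "--stat", "max"])` returns the largest value, while an unsupported option such as `--stat median` makes argparse print a usage message and exit rather than guess at the user's intent.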

Graphical user interfaces

Graphical user interfaces (GUIs) provide visual feedback,

making them more suitable for customers and product delivery.

GUIs rely on visual metaphors like "desktops" and "buttons"

that sit in front of more abstract or terse CLI commands,

which are hidden from view of the user who interacts.

GUIs are more user friendly than CLIs,

trading slower interaction for decreased training time.

Natural user interfaces

Natural user interfaces (NUIs) are an intuitive extreme

and minimize user training with the removal of metaphors.

NUIs, ideally, are invisible to users,

e.g., the "touch" part of touchscreens is a NUI,

since electronic response to touch is intuitively physical.

Other examples include motion-tracking video games,

natural language understanding (NLU) systems (like Siri),

and virtual reality headsets; even some robots may be NUIs!

As technology advances, we'll likely see more and more NUIs.

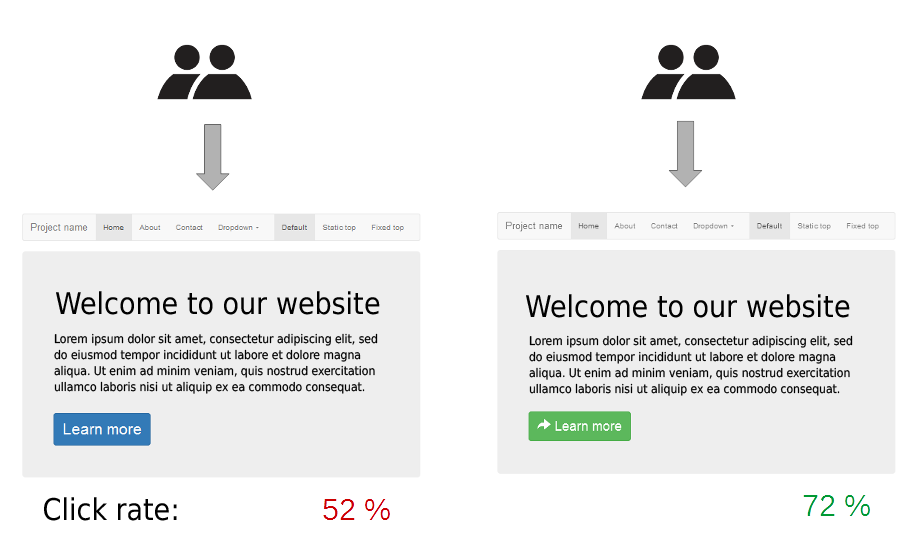

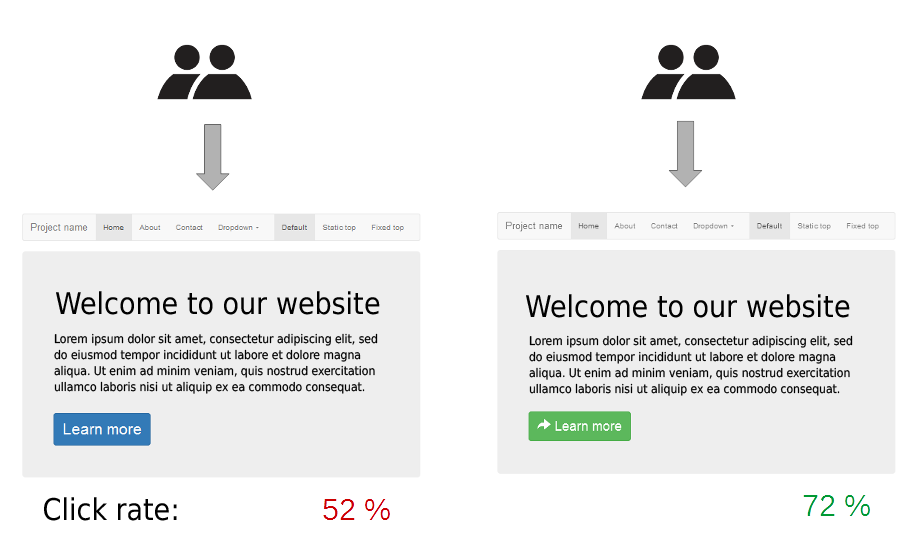

A/B testing

This is a common method for product optimization,

gaining wide adoption in user experience design.

A/B testing is characterized by several key features,

namely, experimentation with two product variants,

and an objective measure of success.

Users are often not aware of the variation in "treatment,"

so not all users of DS are appropriate for testing,

i.e., don't A/B treat executives for a business decision.

But underlying models might be compared this way

if DS is not sandboxed from the public in product development,

most likely post-prototyping, in an optimization stage,

e.g., products recommended by two different models in a live system.
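
The two ingredients named above, two variants and an objective measure of success, can be sketched in a few lines of Python. This is only one possible setup, not a prescribed method: the experiment name, the hash-based assignment, and the choice of a pooled two-proportion z-test on conversion counts are all assumptions made for illustration.

```python
import hashlib
import math


def assign_variant(user_id, experiment="recommender-test"):
    # Hash the (hypothetical) experiment name with the user ID so that
    # each user is consistently shown the same variant on every visit.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    # Compare success rates (e.g., clicks on a recommendation) between
    # variants with a pooled two-proportion z-test.
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in outcomes; the test is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

For example, `two_proportion_z_test(100, 1000, 150, 1000)` compares a 10% success rate under variant A against 15% under variant B; a small p-value suggests the difference is unlikely to be due to chance alone.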

Recap

Data science projects are prone to failure or non-adoption,

but design-thinking strategies can help to avoid hazards.

Knowing the audience and their needs before getting in deep

is one of the surest recipes for success,

along with experimental iteration, incremental improvement,

and collaborative optimization with UX designers.

Next time: Summary

- putting a broad discipline together

- walking through a complete data science pipeline