Acquisition

Jake Ryland Williams

Assistant Professor

Department of Information Science

College of Computing and Informatics

Drexel University

Some themes

Data comes from closed and open sources,

but if it is on the internet you can probably get it.

Data can usually be acquired with creativity,

and is produced with often unforseen ethical implications.

Take care with data licenses and read the fine print.

Where to find data?

- Let's consider how data might be collected:

-

surveying with direct questions,

-

sensing phenomena as they occur, and

-

sampling controlled materials.

Surveys

When surveying, researchers can ask specific questions.

Respondent recorded surveys can be written or electronic,

and interviewed surveys can be face to face or over the phone.

There's determining the right number of respondents,

and if they accurately represent the target population.

In addition, question formation requires extreme care.

- For example, questions might be

-

objective or subjective,

-

closed-ended or open-ended,

-

respondent leading, or

-

loaded with implicit assumptions.

Interviewer-led surveys

On phone or in person—interviews and consultations

While these surveys offer lots of interviewer control,

they are hindered by cost, scalability and interviewer ability.

- Some benefits:

-

can capture emotions, behaviors, and physical cues

-

offer accurate screening

-

interviewer has control and can maintain focus

- Some drawbacks:

-

expensive to run

-

slow to establish

-

difficult to scale

-

subject to interviewer biases

Distributed surveys

Whether distributed as a hard copy or an electronic form,

these types often have a more rigid structure, like

the census, product ratings, Mechanical Turk, or SurveyMonkey.

- Some benefits:

-

scalable (especially electronic)

-

fast completion (especially electronic)

-

anonymity for respondents

-

affordable to operate

- Some drawbacks:

-

lack of control (especially electronic)

-

format restrictions

-

loss of data quality

-

respondents may push through for profit

Sampling

Let's think of sampling as a punctuated collection process,

where an object is removed from its source for measurement.

- Some examples:

-

bone for radio-carbon dating

-

rock for mass-spectrometry compositions

-

blood for typing and chemical analysis

-

hair follicles for gene-sequencing

-

ice-cores for climate records

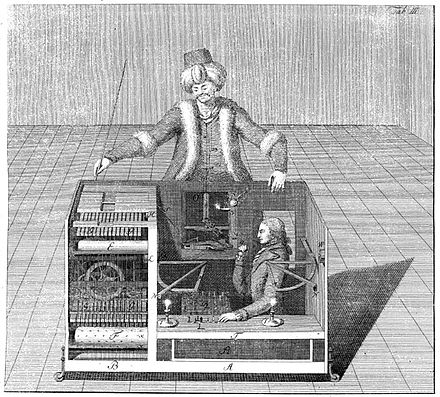

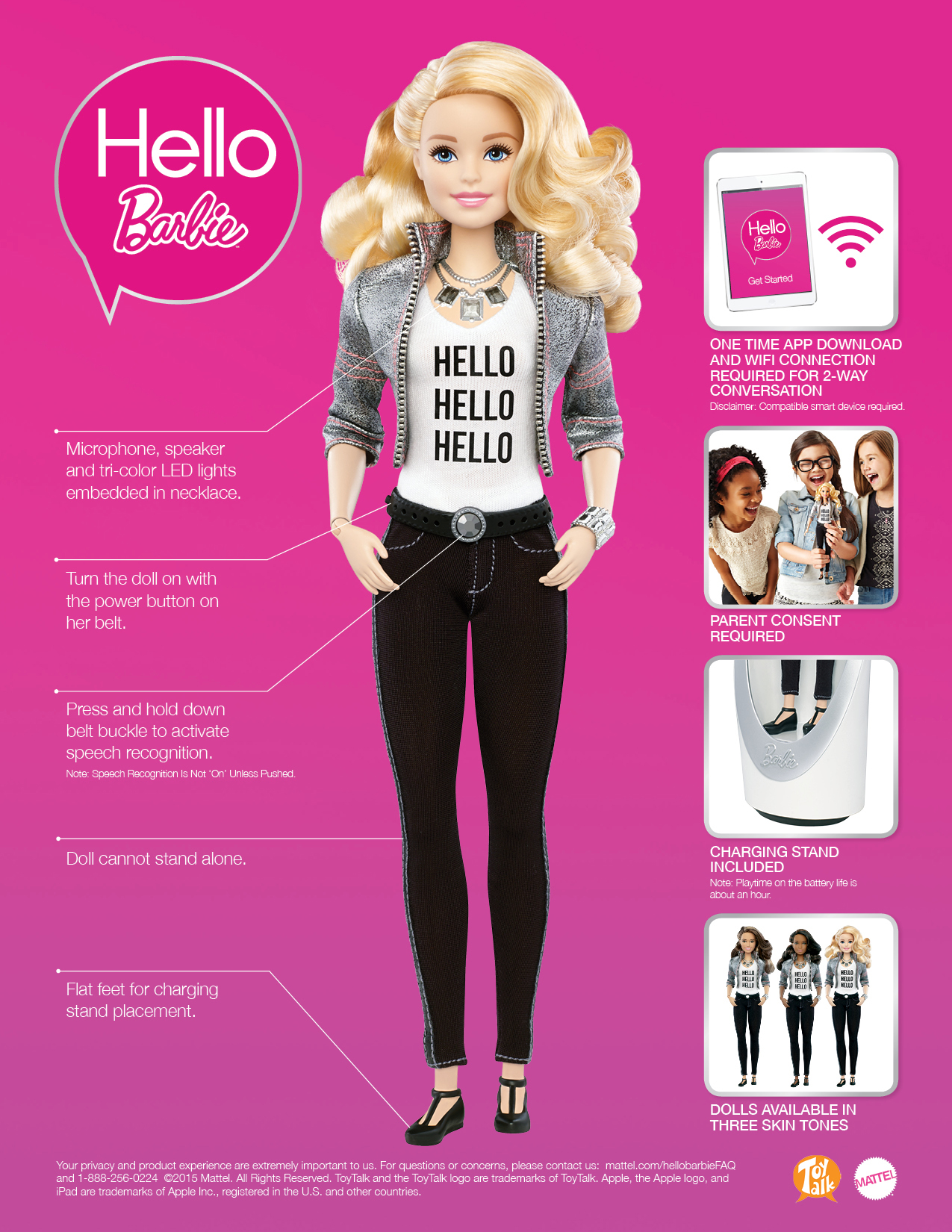

Sensors

Let's think of sensors as passive data collectors

that capture data in live, operational systems.

Here, the great callenge is setting up a sensor system,

and often, the value of data collected is found secondarily.

I.e., a system must exist before its data can be accessed.

- Let's list a few more sources:

-

thermometers, barometers, and altimeters on balloons

-

microphones and cameras in buildings

-

voltmeters in grids

-

call log recording of cell phones

-

blog posting in social media

-

heart rate monitors on watches

-

conversations with "Hello Barbie"

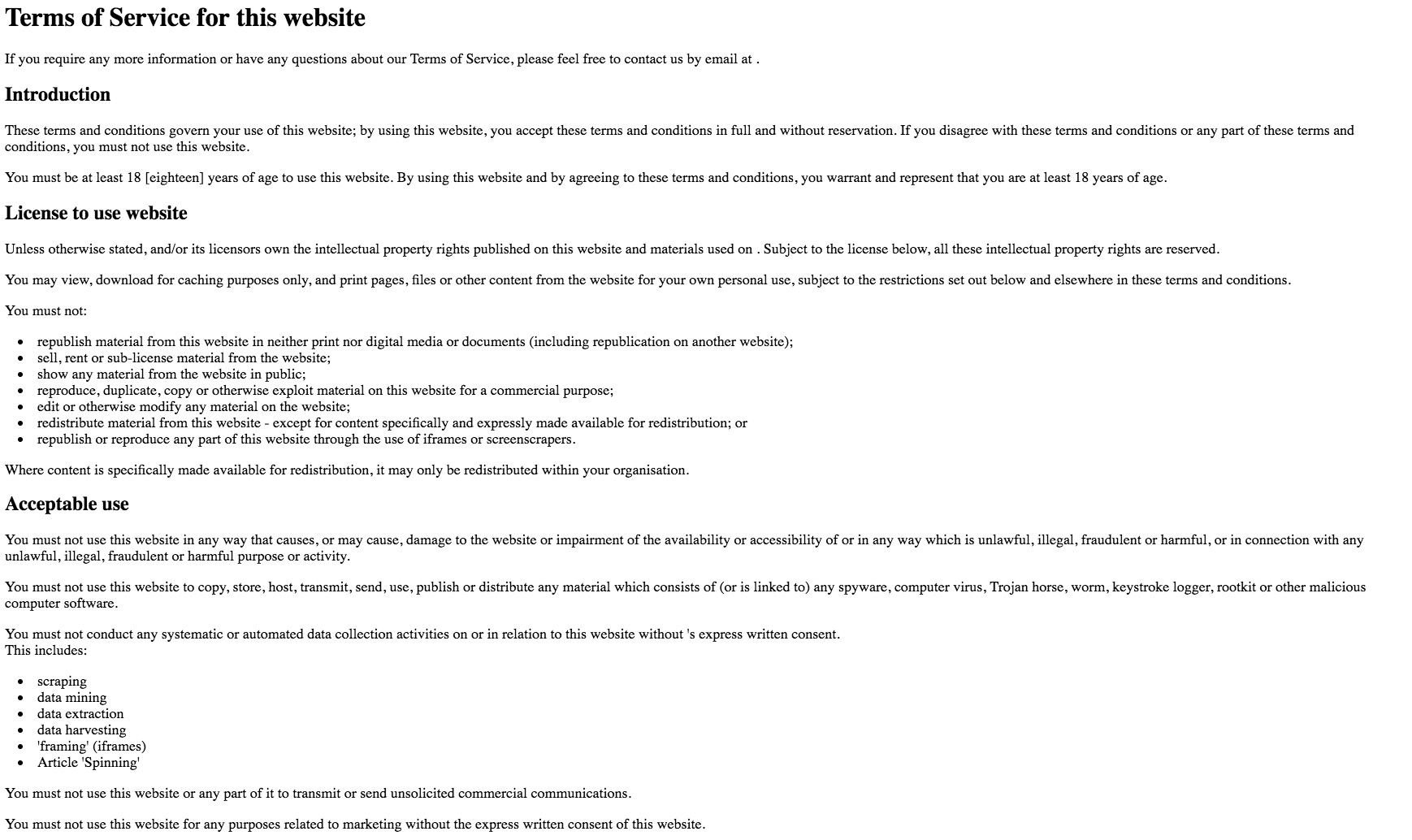

Data access, privacy, and sharing

Depending on the source, access to data is highly variable.

If you collect the data, is it yours to keep and share?

What about if you produce the data?

While academic human subjects research is more controlled,

through Institutional Review Boards (IRBs) certifications,

data generated through commercial is more variant,

with consent and rights waived through terms of service.

Controlled data and APIs

So much electronic data is commercially collected or generated.

If you're the company, then great! You have access.

If you're interested, but not the company, there are hoops.

In fact, companies often release small amounts of data,

oftentimes through an application programming interface (API).

- What's an API (thanks, Wikipedia)?

-

In computer programming, an application programming interface (API)

is a set of subroutine definitions, protocols, and tools for building software and applications.

APIs

That was a technical definition.

APIs provide data access for programming development.

Companies may want you to use their data

(if only in a limited way).

E.g., Twitter data applications engage their community.

For development, their API provides 1% public access.

How do you get access to more data? Money!

Well... Twitter also has data grants, but

to access samples, one writes code to make "API requests."

APIs also aid programming, like openCL for GPU processing.

Scraping electronic data

This probably falls into our data-sampling category.

Lots of data is just sitting, artifactually, on the Internet,

e.g., any website, hosting photos, videos, or text.

There, photos and videos are files in a nearby directory,

and scaping is the act of downloading the html and files.

This is all your browser does when you visit a site,

but beware of policies and terms of service.

- Some data scraping utilities/methods:

-

Python: Beautiful Soup (module)

-

R: rvest (module)

-

Command line: "wget" (linux command)

-

GUI: point and click (human operation)

Libraries

Librarys are not just about books.

Collections include data sets, and affiliates gain access.

This also includes institutional access to private websites.

For example, Drexel has access to the Associated Press images.

Learn how to use the library website.

If something is not there, but could be, then ask a librarian!

Open data access

Not all data is restricted.

Publicly-accessible data is called "open data."

E.g., Wikipedia, Project Gutenberg, or the Internet Archive.

This concept is closely related to open-access software,

which has led to large scientific and commercial growth

through the impact of programming languages and modules.

What data should be open and available?

While PM voting records should probably be open,

personal data, like medical records, should probably be closed.

Required reading: Arguments for and against open data

Data processing challenges

Open data has big potential for advancing problems forward.

Data is often opened to cultivate research around a problem.

Workshops sometimes hold "shared tasks" and release data.

Every year, Yelp! has a open Dataset Challenge.

- Kaggle is a website devoted to data challenges.

-

It is a great place to get into data science,

-

where companies release data, and post a reward.

-

Sometimes the reward is big money,

-

and other times it is an awesome job!

Recap

Data comes from closed and open sources,

but if it is on the internet you can probably get it.

Data can usually be acquired with creativity,

and is produced with often unforseen ethical implications.

Take care with data licenses and read the fine print.

- Next time: Pre-processing

-

What is data munging?

-

What makes data high quality?

-

How can data be cleaned?