"Big Data"

Department of Information Science

College of Computing and Informatics

Drexel University

Some common themes

What is "big data"?

- Really, what's going on is:

- potential insights are a big deal, but

- effective utilization poses a big problem.

Required reading:

> Who coined the term "Big data?"

The three Vs of Big data

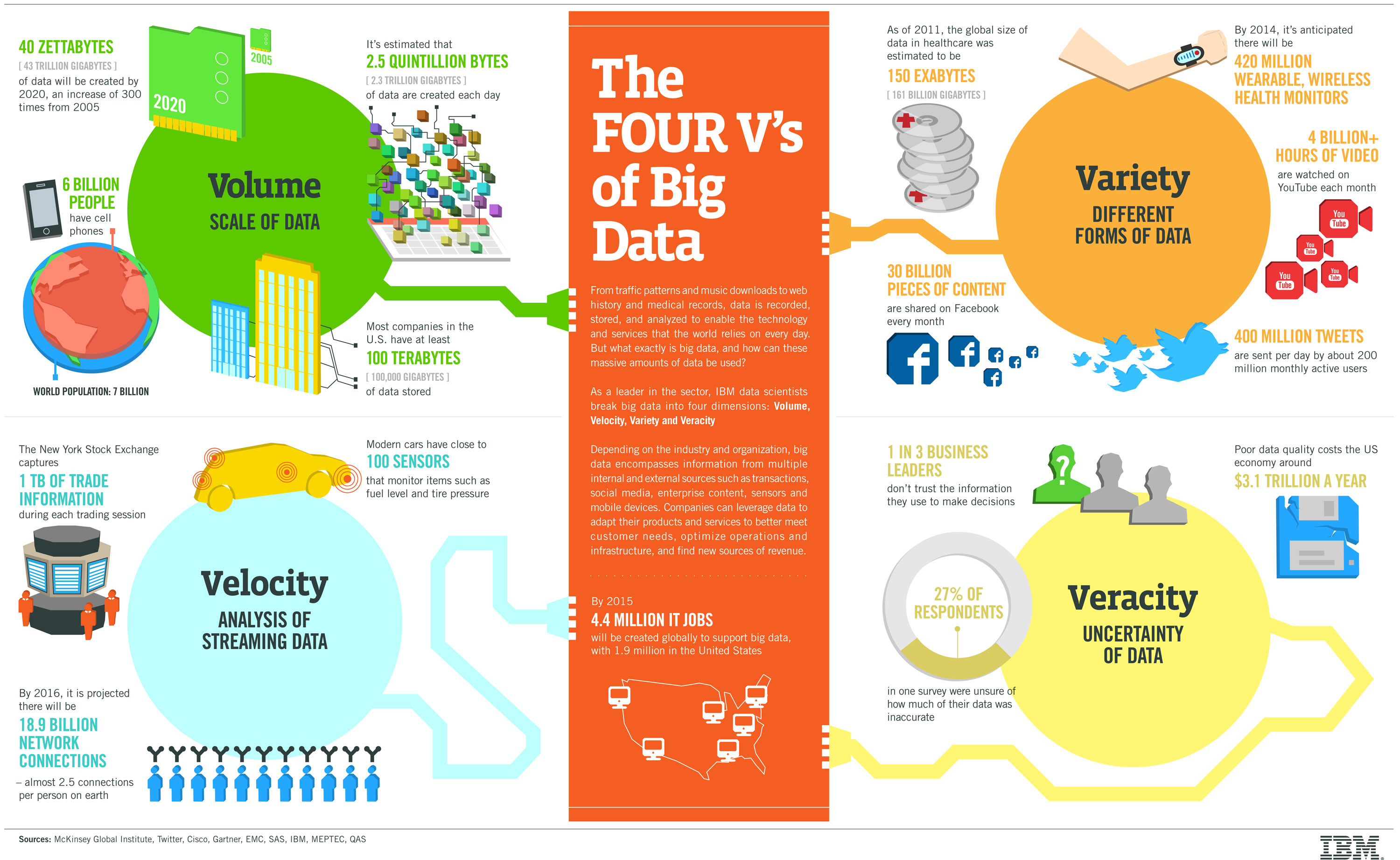

- In 2001, Doug Laney succinctly described big data by 3 Vs:

- Volume: The overall size of data

- Velocity: The rate at which new data emerges

- Variety: The differences in forms of data

Required reading:

The original 3 Vs of big data

The 3+ Vs of big data

- Some other Vs:

- Veracity: The uncertainties of data constitution

- Value: The usefulness of data

- Validity: The quality or trueness of data

- Variability: The changing nature of data

- Visualization: The visually-descriptive power of data

- Vagueness: Confusion over the meaning of big data

- Vocabulary: Structural metadata that provide context

Required reading:

Avoiding that "wanna-V" confusion

Maybe 4 Vs of big data?

- Some thoughts:

- The Vs are only a cute mnemonic for description.

- Any "keepers" should be distinct

- and should be data-intrinsic descriptions.

- This is probably why Veracity has had some staying power.

Volume

- At what size might data be big?

- One computer/drive/connection can't process/store/send it all?

- Coverage approaches the whole population?
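The single-drive question above comes down to simple arithmetic. A minimal sketch, assuming a hypothetical 50 TB dataset and 4 TB drives (both figures are illustrative and not from the readings):

```python
TB = 10**12  # bytes in a terabyte (decimal convention)

def drives_needed(dataset_bytes, drive_bytes):
    """Smallest number of drives that can hold the dataset (ceiling division)."""
    return -(-dataset_bytes // drive_bytes)

# Hypothetical sizes, chosen only for illustration.
dataset_bytes = 50 * TB
drive_bytes = 4 * TB

n = drives_needed(dataset_bytes, drive_bytes)
print(n)        # 13
print(n == 1)   # False: the data does not fit on one drive
```

By this rough test, data starts to look "big" once the answer is greater than one, since storage and processing then have to be spread across machines.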

Velocity

- At what rate might data be big?

- Equipment can only process 1 in 10 records in real time.

- An analysis reveals an event before the news reports it.
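To see why processing only 1 in 10 records is a problem, consider how quickly the unprocessed backlog grows. A minimal sketch, assuming hypothetical rates of 10,000 records arriving per second and 1,000 processed per second (the numbers are illustrative only):

```python
def backlog_after(seconds, arrival_rate, processing_rate):
    """Records left unprocessed after `seconds` of continuous arrival.

    Rates are in records per second. The backlog never drops below zero.
    """
    return max(0, (arrival_rate - processing_rate) * seconds)

# Hypothetical rates: the equipment keeps up with 1 in 10 records.
arrival_rate = 10_000
processing_rate = 1_000

print(backlog_after(3600, arrival_rate, processing_rate))  # 32400000
```

Under these assumptions, one hour of arrivals leaves more than 32 million records waiting, so the system must either sample, drop records, or add capacity.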

All of the Tweets for US

- Required readings:

Variety

- At what heterogeneity might data be big?

- An analysis combines a different model for each of 10 types of data.

- A comprehensive analysis identifies a combined effect.

Veracity

- At what state of disorder might data be big?

- Data are completely unstructured.

- Data capture insight from all levels of participation.

Recap

- Next time: Big data technologies

- How is big data stored?

- How is big data processed?